A RAG Journey That Redefined Collaboration

It’s been a while since my last article, and for good reason—I’ve been deep in the trenches, working on the production implementation of a Retrieval-Augmented Generation (RAG) solution for a client. Our primary focus was ensuring enterprise-grade security and scalability. Yet, as is often the case with technology projects, the most impactful outcome wasn’t the one we had anticipated. Instead, it was an unexpected transformation in how staff collaborated across teams.

The original idea for this RAG was straightforward: help answer customer queries more effectively, assist internal staff in handling emails efficiently, and ensure that responses were drawn exclusively from published materials. This approach not only streamlined workflows but also safeguarded the company from any accidental disclosure of sensitive insider information. Well, the story doesn’t end there.

The Road to Enterprise-Readiness

Our client had a Proof of Concept (PoC) in place, but transforming it into a robust, production-ready solution required significant effort. To accelerate the process, we utilised an open-source accelerator and leaned heavily on Azure Services, minimising custom code to ensure scalability and maintainability. However, achieving enterprise readiness was no small feat. Here’s how we approached the challenge.

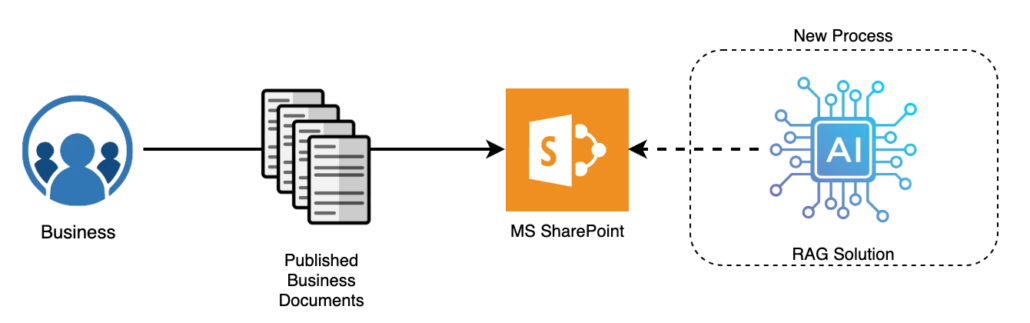

Integrating with Business Processes

The client had not started implementing its data management strategy, and any solution we delivered had to fit seamlessly into their existing processes to avoid resistance. We worked closely with their teams to craft a business-friendly data management strategy that balanced usability with rigour.

The client already had a well-defined process for publishing authoritative documents, which we leveraged to streamline RAG ingestion. By slightly adjusting this process and incorporating their SharePoint services, we introduced a new set of document properties and an Azure Function. These enhancements ensured the data was business-ready and optimised for the RAG to retrieve from effectively.
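To illustrate the idea, here is a minimal sketch of how document properties could gate ingestion. The property names (`status`, `approved_for_rag`, `review_due`) and the helpers `is_ingestable` and `select_for_ingestion` are hypothetical; they are not the client's actual SharePoint schema or Azure Function code.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class DocumentProperties:
    """Hypothetical subset of properties attached to a published document."""
    title: str
    status: str                      # assumed values: "Draft" or "Published"
    approved_for_rag: bool           # assumed flag set during publishing
    review_due: Optional[date] = None


def is_ingestable(props: DocumentProperties, today: date) -> bool:
    """Return True only for published, approved documents that are not overdue for review."""
    if props.status != "Published":
        return False
    if not props.approved_for_rag:
        return False
    if props.review_due is not None and props.review_due < today:
        return False
    return True


def select_for_ingestion(docs: List[DocumentProperties], today: date) -> List[str]:
    """Return the titles of the documents that pass the ingestion gate."""
    return [d.title for d in docs if is_ingestable(d, today)]
```

Keeping the gate as a pure function of the properties means the publishing team controls what the RAG can see simply by maintaining metadata they already own.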

Layering Security Features

From the outset, security was a cornerstone of the RAG implementation uplift. We re-engineered and built a comprehensive security framework that included document-level and blob storage safeguards, robust identity management protocols, and reinforced network protections. These measures were not just add-ons but foundational to the design and development process, ensuring that enterprise-grade security standards were upheld at every stage.

Scaling for data ingestion and response generation in AI introduces unique challenges, but our proactive security strategy tackled these complexities directly. By embedding security into the very fabric of the solution, we ensured a scalable, resilient, and secure RAG implementation, capable of addressing both current and future enterprise needs.
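Document-level security in a RAG usually comes down to making sure a user only ever sees retrieved content they are entitled to read. The sketch below shows that idea in its simplest form. The `Chunk` type, the `allowed_groups` field and the `trim_by_access` helper are assumptions for illustration, not the framework we built; in practice this kind of filter is normally pushed down into the search query rather than applied after retrieval.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Chunk:
    """A retrieved passage tagged with the groups allowed to read its source document."""
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]


def trim_by_access(chunks: Iterable[Chunk], user_groups: Iterable[str]) -> List[Chunk]:
    """Keep only chunks whose allowed groups overlap with the user's groups."""
    groups = set(user_groups)
    return [c for c in chunks if c.allowed_groups & groups]
```

A chunk with no allowed groups is never returned, so the default is to deny access rather than to allow it.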

Testing for Excellence

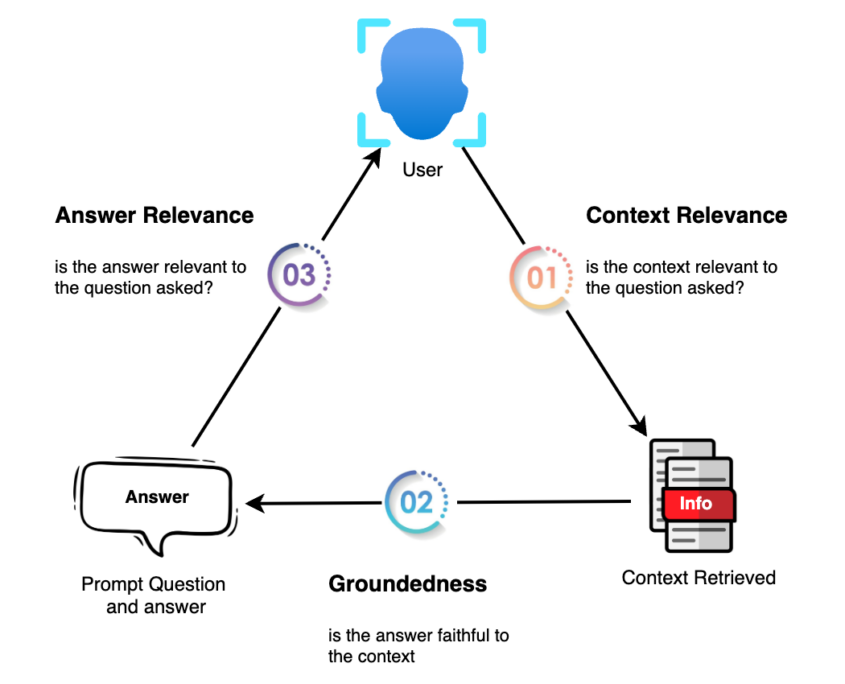

To ensure the RAG system consistently delivered high-quality results, we introduced the RAG Triad framework, focusing on Context Relevance, Groundedness, and Answer Correctness. This custom Marlo solution acted as a quality assurance gate in the release cycle, safeguarding against any degradation in outcomes with each iteration.

Testing in the AI domain requires heightened attention to subtle nuances. Unlike in traditional systems, overlooked errors can be harder to detect yet just as critical. This makes the testing phase indispensable, demanding rigorous analysis and triage whenever results show degradation in any of the tested dimensions. By placing additional focus on this phase, we reinforced the reliability of the system and laid the foundation for a successful production release.
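As a rough sketch of what a degradation check across the three dimensions might look like, the snippet below compares a candidate release against a baseline and reports any metric that has dropped by more than a tolerance. The metric keys, the 0 to 1 score scale, the `tolerance` default and the `find_regressions` helper are all assumptions for illustration; this is not the actual Marlo test harness.

```python
from typing import Dict

# Assumed metric names, mirroring the three dimensions named in the article.
METRICS = ("context_relevance", "groundedness", "answer_correctness")


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Return each metric whose score dropped by more than `tolerance`, with the size of the drop."""
    regressions = {}
    for metric in METRICS:
        drop = baseline[metric] - candidate[metric]
        if drop > tolerance:
            regressions[metric] = round(drop, 4)
    return regressions


if __name__ == "__main__":
    baseline = {"context_relevance": 0.80, "groundedness": 0.90, "answer_correctness": 0.85}
    candidate = {"context_relevance": 0.82, "groundedness": 0.80, "answer_correctness": 0.84}
    # Any metric listed here would be sent for triage before release.
    print(find_regressions(baseline, candidate))
```

A tolerance is used because scores from model-based evaluators tend to fluctuate slightly between runs, so only drops beyond that noise should block a release.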

Leveraging Azure AI Services

To ensure the solution remained enterprise-ready and scalable, we relied on Azure AI services such as AI Search, Document Intelligence and AI Foundry. These tools introduced advanced capabilities, including AI safety mechanisms to identify potential risks, prevent jailbreaking attempts, and promote responsible chatbot behaviour.

By embedding these features, we not only reinforced the security and compliance of the RAG system but also ensured it adhered to the highest standards of ethical AI usage, giving the client confidence in its long-term reliability.

Building for Evolution

From the outset, we approached the RAG solution with a long-term vision, anticipating the evolving needs of the client’s business. Designing for adaptability, we implemented features like history tracking and user feedback loops, giving the team the data needed to improve the system based on real interactions. These capabilities not only provided immediate value but also set the foundation for continuous enhancement, aligning the solution with the dynamic nature of the client’s operations.

By the project’s conclusion, the client had a self-managed, Level 1 RAG that was robust enough to address current challenges while being flexible enough to grow alongside their business. With these features in place, the RAG didn’t just meet the client’s immediate needs—it became a scalable tool for innovation, capable of tackling future complexities with ease.

The Unexpected Outcome

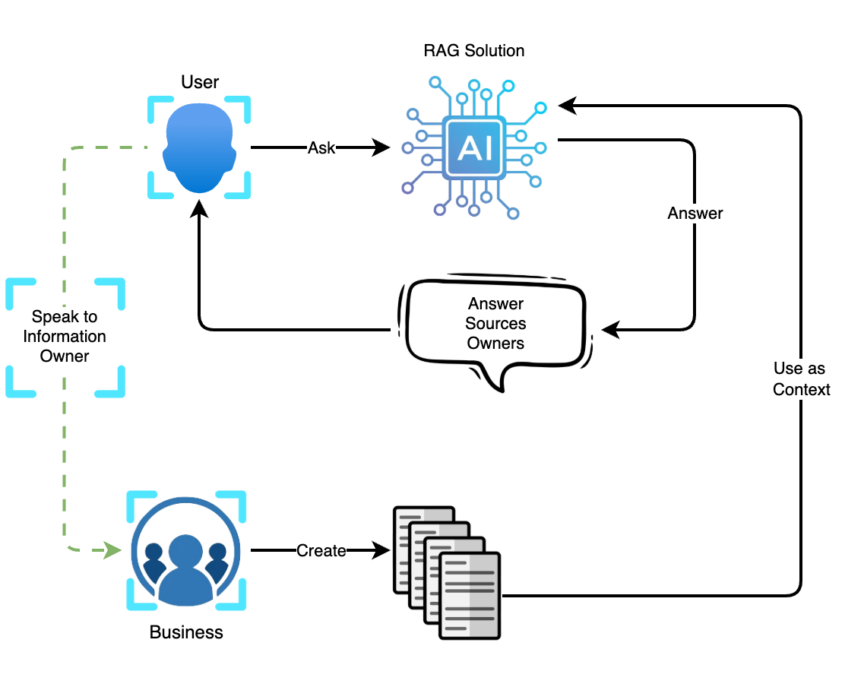

While the initial goal of the RAG was to assist staff in handling external queries, its real value became evident post-deployment.

The AI’s capability to retrieve contextually relevant information and point users to authoritative sources triggered a significant shift in internal staff behaviour. Instead of remaining siloed, the solution encouraged teams to begin reaching out to the appropriate departments to gain deeper insights. This unexpected transformation fostered a culture of collaboration that surpassed initial expectations. What started as a simple AI chatbot became a powerful enabler of cross-departmental communication.

Additionally, the implementation of this solution catalysed a broader maturity in document and knowledge management. The importance of proper labelling and data organisation became immediately apparent, paving the way for more efficient information sharing across the organisation.

What started as a tool for answering external queries evolved into a catalyst for internal innovation, driving both operational improvements and cultural change.

The Marlo Group
